Upgrade Acropolis Base Software (formerly known as NOS) to 4.5.1.2, which includes Foundation 3.0.1 in the CVM. I won't bother detailing the one-click upgrade process for Nutanix, as it is very easy and well documented elsewhere.

Back up any VMs that you wish to retain to a storage device outside of Nutanix, as we're going to blow away the existing Nutanix cluster and start from scratch.

If you want to keep data intact and convert ESXi to AHV one node at a time, you'll need to wait for Acropolis Base Software 4.6.

Once you're sure you've backed up all your important data, log in to any CVM via SSH.

In the SSH terminal, issue a cluster stop command.

nutanix@NTNX-SERIALNUM-B-CVM:192.168.100.11:~$ cluster stop

2016-01-22 09:05:10 INFO cluster:1886 Executing action stop on SVMs 192.168.100.10,192.168.100.11,192.168.100.12,192.168.100.13

2016-01-22 09:05:10 INFO cluster:1895

***** CLUSTER NAME *****

MSP_NTNX

This operation will stop the Nutanix storage services and any VMs using Nutanix storage will become unavailable. Do you want to proceed? (Y/[N]): y

Waiting on 192.168.100.10 (Up) to stop: Zeus Scavenger SSLTerminator SecureFileSync Acropolis Medusa DynamicRingChanger Pithos Stargate Cerebro Chronos Curator Prism CIM AlertManager Arithmos Snmp SysStatCollector Tunnel ClusterHealth

Waiting on 192.168.100.11 (Up, ZeusLeader) to stop: Zeus Scavenger SSLTerminator SecureFileSync Acropolis Medusa DynamicRingChanger Pithos Stargate Cerebro Chronos Curator Prism CIM AlertManager Arithmos Snmp SysStatCollector Tunnel ClusterHealth

Waiting on 192.168.100.12 (Up) to stop: Zeus Scavenger SSLTerminator SecureFileSync Acropolis Medusa DynamicRingChanger Pithos Stargate Cerebro Chronos Curator Prism CIM AlertManager Arithmos Snmp SysStatCollector Tunnel ClusterHealth

Waiting on 192.168.100.13 (Up) to stop: Scavenger SSLTerminator SecureFileSync Acropolis Medusa DynamicRingChanger Pithos Stargate Cerebro Chronos Curator Prism CIM AlertManager Arithmos Snmp SysStatCollector Tunnel ClusterHealth

Waiting on 192.168.100.10 (Up) to stop: Zeus Scavenger SSLTerminator SecureFileSync Acropolis Medusa DynamicRingChanger Pithos Stargate Cerebro Chronos Curator Prism CIM AlertManager Arithmos Snmp SysStatCollector Tunnel ClusterHealth

Waiting on 192.168.100.11 (Up, ZeusLeader) to stop: Zeus Scavenger SSLTerminator SecureFileSync Acropolis Medusa DynamicRingChanger Pithos Stargate Cerebro Chronos Curator Prism CIM AlertManager Arithmos Snmp SysStatCollector Tunnel

Waiting on 192.168.100.12 (Up) to stop: Zeus Scavenger SSLTerminator SecureFileSync Acropolis Medusa DynamicRingChanger Pithos Stargate Cerebro Chronos Curator Prism CIM AlertManager Arithmos Snmp

Waiting on 192.168.100.13 (Up) to stop: Scavenger SSLTerminator SecureFileSync Acropolis Medusa DynamicRingChanger Pithos Stargate Cerebro Chronos Curator Prism CIM AlertManager Arithmos Snmp

Waiting on 192.168.100.10 (Up) to stop: Zeus Scavenger SSLTerminator SecureFileSync Acropolis Medusa DynamicRingChanger Pithos Stargate Cerebro Chronos Curator Prism CIM AlertManager Arithmos Snmp

Waiting on 192.168.100.11 (Up, ZeusLeader) to stop: Zeus Scavenger SSLTerminator SecureFileSync Acropolis Medusa DynamicRingChanger Pithos Stargate Cerebro Chronos Curator Prism CIM AlertManager Arithmos Snmp

Waiting on 192.168.100.12 (Up) to stop: Zeus Scavenger SSLTerminator SecureFileSync Acropolis Medusa DynamicRingChanger Pithos Stargate Cerebro Chronos Curator Prism CIM AlertManager Arithmos Snmp

Waiting on 192.168.100.13 (Up) to stop: Scavenger SSLTerminator SecureFileSync Acropolis Medusa DynamicRingChanger Pithos Stargate Cerebro Chronos Curator Prism CIM AlertManager Arithmos

Note: Your SSH session may drop during the cluster stop process. If so, just open a new SSH session to a different CVM in your cluster.

Check to make sure the cluster has stopped. It's normal for Zeus and Scavenger to be up, but the rest of the cluster services should be down on all CVMs.

nutanix@NTNX-SERIALNUM-A-CVM:192.168.100.10:~$ cluster status

2016-01-22 09:06:44 INFO cluster:1886 Executing action status on SVMs 192.168.100.10,192.168.100.11,192.168.100.12,192.168.100.13

The state of the cluster: stop

Lockdown mode: Disabled

CVM: 192.168.100.10 Up

Zeus UP [4059, 4085, 4086, 4087, 4168, 4181]

Scavenger UP [4723, 4753, 4754, 4799]

SSLTerminator DOWN []

SecureFileSync DOWN []

Acropolis DOWN []

Medusa DOWN []

DynamicRingChanger DOWN []

Pithos DOWN []

Stargate DOWN []

Cerebro DOWN []

Chronos DOWN []

Curator DOWN []

Prism DOWN []

CIM DOWN []

AlertManager DOWN []

Arithmos DOWN []

Snmp DOWN []

SysStatCollector DOWN []

Tunnel DOWN []

ClusterHealth DOWN []

Janus DOWN []

NutanixGuestTools DOWN []

CVM: 192.168.100.11 Up, ZeusLeader

Zeus UP [3911, 3937, 3938, 3939, 4020, 4033]

Scavenger UP [4578, 4607, 4608, 4653]

SSLTerminator DOWN []

SecureFileSync DOWN []

Acropolis DOWN []

Medusa DOWN []

DynamicRingChanger DOWN []

Pithos DOWN []

Stargate DOWN []

Cerebro DOWN []

Chronos DOWN []

Curator DOWN []

Prism DOWN []

CIM DOWN []

AlertManager DOWN []

Arithmos DOWN []

Snmp DOWN []

SysStatCollector DOWN []

Tunnel DOWN []

ClusterHealth DOWN []

Janus DOWN []

NutanixGuestTools DOWN []

CVM: 192.168.100.12 Up

Zeus UP [3617, 3643, 3644, 3645, 3726, 3739]

Scavenger UP [4282, 4313, 4314, 4354]

SSLTerminator DOWN []

SecureFileSync DOWN []

Acropolis DOWN []

Medusa DOWN []

DynamicRingChanger DOWN []

Pithos DOWN []

Stargate DOWN []

Cerebro DOWN []

Chronos DOWN []

Curator DOWN []

Prism DOWN []

CIM DOWN []

AlertManager DOWN []

Arithmos DOWN []

Snmp DOWN []

SysStatCollector DOWN []

Tunnel DOWN []

ClusterHealth DOWN []

Janus DOWN []

NutanixGuestTools DOWN []

CVM: 192.168.100.13 Up

Scavenger UP [3518, 3549, 3550, 3589]

SSLTerminator DOWN []

SecureFileSync DOWN []

Acropolis DOWN []

Medusa DOWN []

DynamicRingChanger DOWN []

Pithos DOWN []

Stargate DOWN []

Cerebro DOWN []

Chronos DOWN []

Curator DOWN []

Prism DOWN []

CIM DOWN []

AlertManager DOWN []

Arithmos DOWN []

Snmp DOWN []

SysStatCollector DOWN []

Tunnel DOWN []

ClusterHealth DOWN []

Janus DOWN []

NutanixGuestTools DOWN []

2016-01-22 09:06:47 INFO cluster:1993 Success!
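
With four CVMs and twenty-odd services each, it's easy to miss a stray UP line when eyeballing that output. Here's a minimal Python sketch of the same check, assuming you've saved the `cluster status` output to a text file or string. The function name `unexpected_services` and the sample text are my own illustration (the `Stargate UP [5000]` line is made up to show a failure), and the parsing relies only on the line layout shown in the transcript above, which may differ in other releases.

```python
import re

# Services the article says may legitimately stay UP after `cluster stop`.
ALLOWED_UP = {"Zeus", "Scavenger"}

# "CVM: 192.168.100.11 Up, ZeusLeader" -> captures the IP address.
CVM_LINE = re.compile(r"^CVM:\s+(\S+)")
# "Stargate DOWN []" or "Zeus UP [4059, 4085]" -> captures name and state.
SERVICE_LINE = re.compile(r"^(\w+)\s+(UP|DOWN)\s+\[")


def unexpected_services(status_text):
    """Return {cvm_ip: [service, ...]} for services that are UP but should be DOWN."""
    offenders = {}
    current_cvm = None
    for raw in status_text.splitlines():
        line = raw.strip()
        cvm = CVM_LINE.match(line)
        if cvm:
            current_cvm = cvm.group(1)
            continue
        svc = SERVICE_LINE.match(line)
        if svc and current_cvm and svc.group(2) == "UP":
            if svc.group(1) not in ALLOWED_UP:
                offenders.setdefault(current_cvm, []).append(svc.group(1))
    return offenders


# Hypothetical sample: the second CVM still has Stargate running.
sample = """CVM: 192.168.100.10 Up
Zeus UP [4059, 4085]
Scavenger UP [4723]
Stargate DOWN []
CVM: 192.168.100.11 Up, ZeusLeader
Zeus UP [3911]
Stargate UP [5000]
"""
print(unexpected_services(sample))  # {'192.168.100.11': ['Stargate']}
```

An empty dictionary means every CVM is in the state described above and it should be safe to move on.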

One last time: make sure you've backed up anything on the Nutanix cluster that you wish to retain (VMs, templates, etc.). If you forgot something, you'll need to start the cluster again with cluster start.

In the SSH terminal, issue a cluster destroy command.

nutanix@NTNX-SERIALNUM-A-CVM:192.168.100.10:~$ cluster destroy

2016-01-22 09:07:04 INFO cluster:1886 Executing action destroy on SVMs 192.168.100.10,192.168.100.11,192.168.100.12,192.168.100.13

2016-01-22 09:07:04 INFO cluster:1895

***** CLUSTER NAME *****

MSP_NTNX

This operation will completely erase all data and all metadata, and each node will no longer belong to a cluster. Do you want to proceed? (Y/[N]): Y

2016-01-22 09:07:24 INFO cluster:935 Cluster destroy initiated by ssh client IP: 192.168.140.62

2016-01-22 09:07:28 INFO cluster:418 Restarted Genesis on 192.168.100.11.

2016-01-22 09:07:28 INFO cluster:418 Restarted Genesis on 192.168.100.10.

2016-01-22 09:07:28 INFO cluster:418 Restarted Genesis on 192.168.100.13.

2016-01-22 09:07:28 INFO cluster:418 Restarted Genesis on 192.168.100.12.

2016-01-22 09:07:28 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.10', u'192.168.100.11', u'192.168.100.12', u'192.168.100.13']

2016-01-22 09:07:31 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:07:33 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:07:36 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:07:39 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:07:41 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:07:44 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:07:47 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:07:50 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:07:52 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:07:55 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:07:58 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:08:01 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:08:03 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:08:06 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:08:09 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:08:12 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:08:14 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.11', u'192.168.100.10', u'192.168.100.13', u'192.168.100.12']

2016-01-22 09:08:17 INFO cluster:325 Checking for /home/nutanix/.node_unconfigure to disappear on ips [u'192.168.100.10']

2016-01-22 09:08:18 INFO cluster:1993 Success!

nutanix@NTNX-SERIALNUM-A-CVM:192.168.100.10:~$

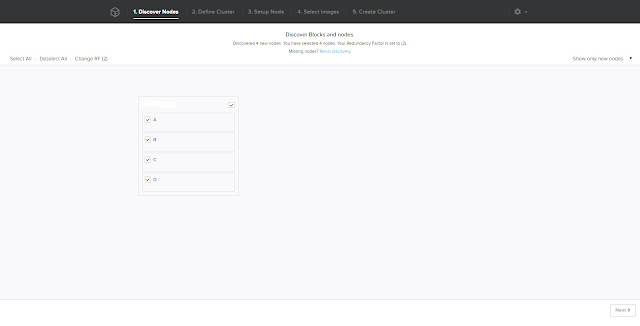

Once the cluster is destroyed, point your web browser to a CVM IP address on port 8000. For example: 192.168.100.10:8000/gui

This will take you to Foundation 3.0.1, which is now running inside the CVM rather than on a Linux workstation on your laptop.
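
If the page doesn't load, it can help to confirm that port 8000 is actually answering on the CVMs before blaming the browser. The following is a rough Python sketch, not anything shipped by Nutanix: the helper names are mine, and the `http://` scheme is an assumption, since the example address above doesn't include one.

```python
import socket

FOUNDATION_PORT = 8000  # port quoted in the article


def foundation_url(cvm_ip, port=FOUNDATION_PORT):
    """Build the Foundation GUI address for a CVM (http scheme is assumed)."""
    return "http://{}:{}/gui".format(cvm_ip, port)


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def reachable_foundation_urls(cvm_ips, probe=port_open):
    """Return Foundation URLs for the CVMs whose Foundation port answers."""
    return [foundation_url(ip) for ip in cvm_ips if probe(ip, FOUNDATION_PORT)]
```

Calling `reachable_foundation_urls(["192.168.100.10", "192.168.100.11", "192.168.100.12", "192.168.100.13"])` from a machine on the same network returns the addresses worth trying in the browser. The `probe` parameter exists only so the function can be exercised without a live cluster.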

See Converting ESXi to Acropolis Hypervisor - Part 2 - Foundation 3.0 for the next steps.
